Activism-based rather than evidence-based

A recently published study in the prestigious journal "Nature Human Behaviour" claims that Republican 'anti-trans' legislation causes upticks in suicide attempts of 7-72%. It is riddled with flaws.

This article has grown quite detailed, so I've summarized the key insights in the bullet points below. For those interested in further details, feel free to explore the relevant sections. As a Ph.D. student in empirical economics with over five years of experience in causal estimation methods and data analysis, I feel well-positioned to offer some general observations on this study. You can find the link to the study in the footnotes.[1]

The Article in Short:

The Study in General:

Influence of Study on Media and Activism: The study has garnered significant attention from left-leaning media and trans activists, who claim it provides causal evidence that anti-trans legislation leads to more suicide attempts (a 7-72% increase) among trans youth, framing any opposition to gender-affirming care as promoting child suicide.

Questionable Data and Estimates: Despite the study's claims of precision due to its large sample size, it relies on flawed data and unreasonable estimates, yet it is being used by activists and media to push a narrative and emotionally pressure those questioning gender ideology's impact on children.

Empirical Analysis: The Devil is in the Details

Methodology: The study uses the difference-in-differences approach, common in social science, to compare states (Republican vs. Democrat) before and after "anti-trans" laws to assess causal effects.

Data Sources: The researchers reference the ACLU's tracker to define which states enacted "anti-trans" legislation, but do not provide a clear breakdown of treatment and control groups. (Hence, I reconstructed it for you.)

Limited Observation Period: Most data cover only one period after laws were passed, with only Idaho as a treatment group initially, expanding to more states in later waves. The relevant treatment group consists of TGNB youth in Idaho, Arkansas, Mississippi, Montana, West Virginia and North Dakota.

Small Sample Sizes in Treatment Group: The results showing increases in suicide attempts rely on very small sample sizes in the treated states (as few as 38-108 individuals per state), casting doubt on the reliability of the findings.

The Data: The problems with convenience sampling

Convenience Sampling Bias: The study uses convenience sampling through social media platforms, leading to potential selection bias and overrepresentation of dedicated or activist respondents, which may not reflect the broader TGNB youth population.

Survey Design and Respondent Capability: Concerns are raised about the ability of young adolescents, especially 13-year-olds, to accurately complete a lengthy 150-question survey on complex topics like mental health and socioeconomic issues.

Statistical Discrepancies: The study's suicide attempt data shows inconsistencies, with a small number of extreme responders likely inflating the overall figures, questioning the validity and generalizability of the findings.

Ethical and Verification Issues: The study lacks necessary ethical safeguards for minors reporting multiple suicide attempts and does not include robustness checks with external data sources to verify its alarming findings.

An Old Trick: Don’t mention any inconsistent findings in the abstract

Superficial Media Coverage: Journalists often only read abstracts or introductions, leading to incomplete understanding and propagation of potentially flawed research findings into public discourse and policy.

Contradictory Results in Suicide Data: The study reports conflicting outcomes, showing an increase in suicide attempts but a decrease in suicidal ideation following the passage of anti-transgender legislation, which raises questions about the validity of the findings.

Insufficient Explanation for Discrepancies: The authors attempt to resolve the contradiction by citing technical details about pre-treatment trends, but this explanation fails to adequately reconcile the opposing findings.

The ‘anti-trans’ legislation in question: Separating sports according to sex leads to suicide. Really?

Misleading Focus on Legislation: The study's introduction focuses on laws banning gender-affirming care, but the majority of the 48 laws cited are related to sports participation based on biological sex, not gender identity, which are claimed to increase suicide attempts.

Exaggerated Suicide Statistics: The study suggests that these laws lead to a 7-72% increase in suicide attempts, but the extreme estimates appear clinically unreasonable and unsupported by official statistics on youth suicides.

Lack of Supporting Data: The authors fail to corroborate their alarming suicide attempt statistics with other reliable sources, such as national suicide data or comparisons to other studies on TGNB youth suicidality.

Questionable Academic Integrity: There is concern that the authors, journal editors, and peer reviewers neglected their responsibility to rigorously validate these extreme claims, potentially allowing biased or incomplete findings to go unchecked.

Incomplete literature review

Lack of Engagement with Relevant Literature: The authors fail to cite recent studies that offer a broader perspective on suicidality in gender-distressed youth, despite the availability of significant research from countries like the UK, Canada, and the Netherlands.

Suicidality Rates Comparable to Other Mental Health Issues: Research shows that while suicidality rates are higher among gender-distressed youth compared to the general population, they are similar to those of young people with other mental health comorbidities.

Distinction Between Suicide Attempts and Death by Suicide: The paragraph emphasizes the need to distinguish between suicide attempts and actual suicide mortality, as studies like the Cass Review show completed suicides are rare and often linked to other mental health complexities.

No Evidence Supporting "Life-Saving" Claims of Pro-Trans Policies: The authors' assertion that pro-trans policies, such as gender-affirming care for minors, are life-saving is criticized for lacking evidence, as highlighted by the Cass Review.

Confirmation Bias

Conflict of Interest and Bias: All authors are affiliated with the Trevor Project, an advocacy group promoting gender-affirming care, raising concerns about potential bias in their research, especially since the organization pushes claims based on flawed studies.

Confirmation Bias in the Study: The study’s significant flaws, combined with the failure to acknowledge them, suggest that the research was driven by confirmation bias rather than a neutral pursuit of truth, calling into question its objectivity.

The Main Article

The Study and the Media’s Furore

“We knew if we were able to show this, it would be a breakthrough for using definitive scientific evidence to support calls for protective and affirming policies for trans and nonbinary young people and to help, ultimately, save their lives.” - Dr. Ronita Nath (Head of Research at the Trevor Project)

It has produced the headlines it was intended to. Once it was published, large (left-leaning) media outlets such as CNN, NBC, Time Magazine and Vice, as well as widely known trans advocates like Jack Turban, concluded that they had finally found causal evidence for what trans rights organizations and activists have been claiming for years: any law that prioritizes biological sex over gender identity, or gatekeeps so-called gender medicine, will lead to the death of trans people. And to make the claim land harder in people’s hearts and minds, it’s about ‘trans children.’

The study in question surveyed people aged 13-24 who identify as transgender or non-binary (in what follows, TGNB) in all US states. It compared individual-level outcomes such as suicide attempts, suicidal ideation and some socio-economic outcomes such as unemployment in states that have passed so-called anti-transgender legislation (Republican-led states) with the same outcomes in states that have not passed such laws (Democrat-led states). It claims that, relative to ‘trans-friendly’ states, suicide attempts of TGNB youth in Republican states increased by 7-72% in the years following the enactment of what some organizations call ‘anti-LGBTQ+’ bills. And because there is no discernible difference in suicide attempts between Republican and Democrat states in the years before the laws were passed, the authors conclude that the increase is causally linked to the legislation in question.

To cut a long article short: The study claims a level of precision and rigor that no previous trans-related study had, particularly because it does not rely on the ridiculously small sample sizes and poor statistical analyses that made big medical associations around the world fall for gender-affirming care within a second, but can draw on tens of thousands of respondents. But the way the data was gathered is hugely problematic and, given the type of legislation in question, its main results appear unreasonable. Nevertheless, it was persuasive enough for some media outlets to circulate its main findings uncritically. Hence, once again, anyone questioning the influence of gender ideology on children is confronted with another barrage of emotional blackmail: those who raise concerns are supposedly indifferent to child welfare or are advancing an agenda that could harm vulnerable youth. The big danger is that the study will likely be used in future policy discussions around trans issues and, because its flaws are more subtle than those of previous research, direct rebuttals will be more difficult. This article should serve as a pool of arguments you can always refer to when someone cites the study.

Digression: What is causality actually?

Causal evidence is a buzzword in social science. Having gone through years of rigorous and often frustrating empirical research courses, I believe it is a term that should only be used for excellent and very cleanly identified studies. Causality is also a mysterious word, and oftentimes nobody really knows what it means. So here comes a quick description. In short, causality means that changing some policy X causes a change in outcome Y. It is distinct from the concept of correlation, which simply states that when X changes, you notice an associated change in Y. However, this might simply be a coincidence. For instance, there is an old myth that storks bring babies, and if you were to get data on the number of babies born and the number of storks in a geographic region, you might easily find a positive correlation. However, this is certainly not a causal relationship and might simply be driven by the fact that storks live in more urban areas, where, unsurprisingly, more babies are born. Establishing causality between two phenomena is no easy undertaking, and it is one that most social science researchers spend their whole careers on.
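The stork logic can be made concrete with a small simulation. This is only a sketch with made-up numbers: `region_factor` is a hypothetical regional characteristic that drives both stork counts and births, and storks have no effect on births at all. The raw correlation is nevertheless very high, and it vanishes once the common factor is taken out.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate(n_regions=2000, seed=0):
    """Return (raw correlation, correlation after removing the confounder)."""
    rng = random.Random(seed)
    storks, babies, stork_noise, baby_noise = [], [], [], []
    for _ in range(n_regions):
        # Hypothetical confounder: drives both variables (assumption).
        region_factor = rng.uniform(1, 10)
        s_noise = rng.gauss(0, 1)
        b_noise = rng.gauss(0, 1)
        # Storks never enter the equation for babies: no causal effect.
        storks.append(2 * region_factor + s_noise)
        babies.append(5 * region_factor + b_noise)
        stork_noise.append(s_noise)
        baby_noise.append(b_noise)
    return pearson(storks, babies), pearson(stork_noise, baby_noise)


raw_corr, adjusted_corr = simulate()
print(f"raw correlation: {raw_corr:.2f}")
print(f"after removing the confounder: {adjusted_corr:.2f}")
```

In real data the confounder is usually unobserved, which is exactly why it cannot simply be subtracted out as it is here.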

A causal relationship is established between X and Y when, after having controlled for all confounding factors, we still see a statistically robust relationship. In reality, this is an almost impossible task because confounding factors are ubiquitous and we can hardly include all of them (often because they cannot be measured or nobody has collected the data). Randomized experiments, or randomized controlled trials, are a way out and represent the gold standard of causal inference. The simple reason is that we randomly assign treatment (not a medical term per se; it can also simply mean a policy intervention of any kind), and this randomization ensures that confounders are irrelevant. Think of a pharma company that wants to bring a new cancer drug to the market and has to prove its efficacy. To do so, it randomly gives some cancer patients the drug (the treatment group) and some cancer patients a placebo (the control group). Under randomization, individual factors such as a patient’s other health conditions, their nutrition, or level of exercise should be comparable in the treatment and control groups. If there are statistically significant positive differences in patient health between the treatment and control groups, the drug causally improves cancer outcomes.

In most social science settings, such as the proposed relationship between ‘anti-transgender’ laws and suicide attempts, randomized controlled experiments are neither ethical nor feasible. As a result, social science researchers rely on what they call ‘natural experiments.’ That is, arbitrary events that happen in the real world and more or less randomly divide populations into treatment and control groups are used to study the causal effects of policy interventions. A famous example from economics is a study by Card and Krueger (1993)[2], who wanted to know whether (higher) minimum wages lead to more unemployment. They used a reform in which the state of New Jersey introduced a higher minimum wage while Pennsylvania did not, and compared employment on either side of the state border before and after the reform. This leads me to discuss the first point of the study in question: The empirical method.

The Empirical Method: The devil is in the details

The researchers in the study on ‘anti-transgender laws’ use a method that has become incredibly widespread in social science research. It’s called difference-in-differences, and its appeal lies in its wide applicability and intuitive nature. It is used to identify causal effects in settings with multiple periods. The intuition is that some units (the treatment group, in this case Republican states) implement a policy at some point in time, whereas no such policy is implemented in the control group (here, Democrat states). In the time before the treatment is implemented, you would like to see outcomes such as attempted suicide evolve in parallel, and after the treatment is implemented, outcomes diverge. If that is the case, social science researchers would speak of a policy having a causal effect. In principle, the setting of this study lends itself well to such an approach, and it is the least problematic aspect of this study. The graph below illustrates the intuition of this method quite well:
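In its simplest form, with two groups and two periods, the method boils down to subtracting the control group's change from the treatment group's change. The sketch below uses invented outcome levels purely for illustration; it is not the authors' specification, which involves several waves and staggered treatment.

```python
def did_estimate(treat_pre, treat_post, control_pre, control_post):
    """Two-by-two difference-in-differences.

    The change in the control group stands in for what would have
    happened to the treated group without the policy (parallel trends).
    """
    return (treat_post - treat_pre) - (control_post - control_pre)


# Hypothetical mean outcomes, NOT taken from the study.
effect = did_estimate(
    treat_pre=0.40, treat_post=0.55,      # treated states before / after
    control_pre=0.40, control_post=0.45,  # control states before / after
)
print(f"difference-in-differences estimate: {effect:.2f}")
```

The estimate is only as credible as the parallel-trends assumption behind it, which is why the pre-treatment periods in the paper's graphs matter so much.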

The complicating factor in this study is that the composition of the treatment and control groups is not straightforward. This is mainly due to states passing ‘anti-trans’ laws at different points in time. Hence, the control group changes over time, and this has implications for the interpretation of the results. As is usual in such studies, authors would typically provide a table showing which state was treated when, so that readers understand how the treatment and control groups are made up. Instead, they refer to the ACLU’s tracker of ‘anti-LGBTQ’ legislation by American states. So, to fully understand what the treatment and control groups look like, I went to the website myself and followed their way of constructing the treatment sample. 19 states passed such legislation, but the authors dropped 4 states due to data issues. I came up with the following table showing the 15 state legislatures that enacted so-called ‘anti-trans’ bills:

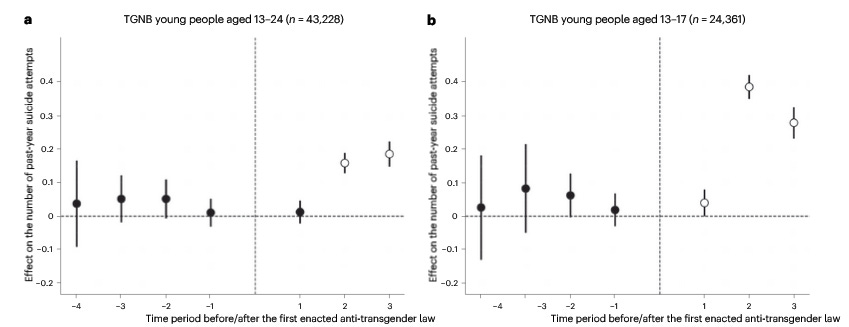

The authors consider a state’s TGNB youth to be targeted if a law was passed in the legislature before the recruitment period. Arizona, for instance, enacted its laws in between waves 4 and 5, and hence we observe post-law outcomes for TGNB youth in Arizona only in the last wave (1 data point). Because the bulk of the legislation was passed around that time, for most states we also observe outcomes such as suicide attempts for only one period after the law was passed. In wave 3, the treatment group consists of Idaho alone and the control group is all the rest, whereas the treatment group grows in the subsequent waves by all those states with a “TREATED” entry. Now look at the main outcome graphs of the paper:

This is what, if you’re lucky, your results look like using the empirical method in question. You normalize treatment (enactment of legislation) to period 0 (where the vertical line is); you see points hovering around the horizontal line before that and a divergence from that line afterwards. Notice, however, that they use 3 time periods after enactment of the laws, which means that for the period 3 estimate we actually only use the data of Idaho vs. those states that have not passed any law, and for period 2 it is Idaho, Arkansas, Mississippi, Montana, West Virginia and North Dakota. Only for period 1 after the vertical line do we actually use all the Republican-led states that passed legislation. Now, because in 3 out of 4 graphs the supposed effect is really not there in period 1, all the increases in suicide attempts that made it into headlines come from average annual sample sizes of 104 (Idaho), 108 (Arkansas), 58 (Mississippi), 59 (Montana), 81 (West Virginia) and 38 (North Dakota). It is unlikely that so few observations, combined with the non-random nature of the data (see next section), deliver any meaningful insights.
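To see how thin the treated sample behind the later periods is, the figures quoted above can simply be added up. The sketch treats the average annual sample sizes as rough per-period counts, which is a simplifying assumption on my part.

```python
# Average annual sample sizes of treated states, as quoted in the text above.
avg_annual_n = {
    "Idaho": 104,
    "Arkansas": 108,
    "Mississippi": 58,
    "Montana": 59,
    "West Virginia": 81,
    "North Dakota": 38,
}

# Treated states contributing to each post-treatment period, following
# the description above (period 1 uses all treated states and is omitted).
states_by_period = {
    2: list(avg_annual_n),
    3: ["Idaho"],
}


def treated_n(states):
    """Approximate number of treated respondents behind an estimate."""
    return sum(avg_annual_n[state] for state in states)


for period, states in sorted(states_by_period.items()):
    print(f"period {period}: roughly {treated_n(states)} treated respondents")
```

A few hundred self-selected respondents in period 2, and about a hundred in period 3, carry the headline estimates.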

The Data: The problems with convenience sampling

A key feature of reliable and sound empirical research is high-quality, ideally random draws of data. In ideal settings, we want to work with representative samples of the population we want to study. That is, if we want to look at the effects of Republican state laws, we would ideally observe a randomly drawn subset of TGNB youth. Such a sample would be representative, thus allowing valid conclusions about the overall TGNB youth population. This study, however, relies only on samples created through convenience sampling. Basically, convenience sampling is like sending a survey invitation to your friends and co-workers and asking them to complete it and forward it to their own friends, who are asked to do the same. In this study, the authors use multiple ‘waves’ of TGNB youth surveys (meaning the same questions are asked of samples at different points in time) initiated by the advocacy group Trevor Project. Survey participants were recruited via targeted advertisement on Facebook, Instagram and Snapchat. This can create a snowball effect in which a very interested and dedicated individual forwards the link to similarly dedicated friends, and ultimately we learn something about very dedicated trans-identified adolescents but very little about the general population of trans-identified adolescents (selection bias). Individuals were asked up to 150 questions ranging from mental health to socioeconomic questions. Although some restrictions were put in place to prevent obvious problems such as people filling out the survey multiple times, these are only minor cosmetic changes to a hopelessly flawed data source.

Convenience sampling is the very opposite of representativeness because it creates a sample in which certain individuals are overrepresented. In this case, you have to be on one of these social media apps and you have to be very dedicated to answer 150 sensitive questions about your overall mental health status. Also, it is very questionable whether 13-year-olds are mentally capable of answering 150 questions on very complex topics. The authors did not show the age distribution of the respondents and, in principle, it could be a lot of 24-year-olds and very few 13-year-olds who completed the survey. How could we learn anything about your average ‘trans youth’ in such a scenario? Hypothetically, some very dedicated trans activists could have filled out the survey strategically, answering in the most extreme way to skew the results. Typically, researchers would reweight the data based on observed characteristics such as age, income or sex to make a convenience sample more closely resemble a representative sample. Moreover, the non-random nature of the data could manifest itself differently in the treatment and control groups, meaning that the sample in California might be systematically different (age structure, economic outcomes) from the one in Wyoming. To counter such concerns, researchers would normally provide balancing tables showing that the treatment and control groups are similar along other observable characteristics. The authors in this study did no such thing.
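To illustrate what such reweighting does, here is a minimal post-stratification sketch. Every number in it is hypothetical (the age shares, the group means and the assumed population split); it only shows how an overrepresented group can pull a raw sample mean away from the population mean.

```python
# All numbers below are hypothetical and chosen for illustration only.
group_means = {"13-17": 0.5, "18-24": 0.3}        # mean outcome per age group
sample_shares = {"13-17": 0.2, "18-24": 0.8}      # composition of the sample
population_shares = {"13-17": 0.5, "18-24": 0.5}  # assumed true composition

# Post-stratification weight: how much each sampled respondent of a
# group should count so the sample matches the population composition.
weights = {
    group: population_shares[group] / sample_shares[group]
    for group in sample_shares
}

raw_mean = sum(group_means[g] * sample_shares[g] for g in group_means)
reweighted_mean = sum(
    group_means[g] * sample_shares[g] * weights[g] for g in group_means
)

print(f"raw sample mean: {raw_mean:.2f}")
print(f"reweighted mean: {reweighted_mean:.2f}")
```

Reweighting can only correct for characteristics that are observed; it does nothing about self-selection on unobserved traits such as how dedicated a respondent is.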

Although the authors acknowledge the non-random nature of their data, they do very little to verify any of the results using reliable data. If you find dramatic upticks in suicide attempts, the next best thing would be to conduct robustness checks and try to obtain any corroborating evidence on increased suicide rates (such as hospital records or upticks in psychological counselling or any other verified data which either shows or is correlated with increased suicide attempts). Even showing Google Trends of news reports about suicide attempts in Republican-led states would be important pieces of evidence.

Another interesting point is to look at the suicide attempt statistics a bit more closely. The authors find that the average number of suicide attempts is 0.420 for the whole sample and 0.539 for the 13-17-year-olds. That means that for 100 people randomly drawn, there are 42-54 suicide attempts. However, in their 2022 National Survey on LGBTQ Mental Health, they report that only between 12% and 22% (depending on whether non-binary or transgender and the target sex) of TGNB youth actually attempted suicide at all (20% in 2021)[3]. That implies that a minority of respondents is responsible for a lot of suicide attempts. Coming back to the hypothetical 100 respondents and taking 12% (22%) at face value, this would mean that 12 (22) attempted suicide at all. However, we record 42 suicide attempts for the whole sample, which implies that these 12 (22) have attempted suicide 3.5 (1.9) times on average. Even more troublesome, for the 13-17-year-olds these numbers shoot up to 4.5 (2.5). It seems that the remarkable findings underpinning this story are driven by very extreme responders, which raises serious questions about those participants’ reliability. When you have attempted suicide 4.5 times in the previous year, you are either trolling the survey and the results are rubbish, or you are so mentally unwell that you are unlikely to truthfully answer up to 150 questions about very difficult topics. Not to mention ethical standards: if a participant, and more strikingly a minor, reports multiple suicide attempts and this is an honest representation of their mental state, authorities should be involved.
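The back-of-the-envelope calculation above can be reproduced in a few lines. It assumes, as the paragraph does, that the mean number of attempts from the study and the share of attempters from the 2022 survey describe comparable samples.

```python
def attempts_per_attempter(mean_attempts, share_attempted):
    """Average attempts among those who attempted at least once.

    mean_attempts: attempts per respondent in the whole sample
    share_attempted: fraction of respondents with at least one attempt
    """
    return mean_attempts / share_attempted


# Means quoted from the study, shares quoted from the 2022 survey.
for label, mean_attempts in [("whole sample", 0.420), ("ages 13-17", 0.539)]:
    for share in (0.12, 0.22):
        implied = attempts_per_attempter(mean_attempts, share)
        print(f"{label}, {share:.0%} attempters: {implied:.2f} attempts each")
```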

The most charitable interpretation of this paper’s causal link is that it only applies to their strange sample but definitely not to the population of gender distressed young people as a whole.

An Old Trick: Don’t mention any inconsistent findings in the abstract

Most journalists will not read more than the abstract or maybe the introduction of a newly published paper. In a contentious field like this, that is serious journalistic neglect. The main finding of the paper was that past-year suicide attempts of TGNB youth increased by 7-72% (notice the range, by the way) in states that passed so-called ‘anti-transgender legislation’ compared to states that did not do so. The main graph showing this result is the following:

However, when you keep reading, you come across this graph but now replacing “suicide attempts” with “seriously considering suicide” as an outcome:

These graphs show that, following the passage of the laws, fewer people seriously considered suicide within three years than in states that are ‘trans-friendly’. These two findings cannot be easily reconciled because they point in opposite directions. How can more people attempt suicide while fewer people seriously consider it? The authors know that this is difficult to reconcile but take a cheap way out: they simply refer to the technical fact that the dot at -1 on the x-axis is below the dotted line, which would indicate that suicidal ideation was on different trajectories before the laws were passed, and that this somewhat invalidates the result. While it is correct that this violates the assumption of “parallel pre-treatment evolution” required for a causal interpretation in difference-in-differences analyses, the outcome still contradicts the main finding. In any serious social science seminar, a presenting researcher would now face tough questioning about how such hyperbolic messages can still be sold when the results contradict each other. Any serious researcher would do everything they can to find other, more convincing data sources or otherwise substantially revise the paper. But it remains a privilege of trans-exceptionalism that such research makes it into the most prestigious journals of academia.

The tragic thing is that news outlets typically just copy and paste messages from the abstract or introduction, so the inconsistencies remain undetected and the questionable findings propagate through newsrooms into policymakers’ offices.

The ‘anti-trans’ legislation in question: Separating sports according to sex leads to suicide. Really?

When you hear about the ‘anti-transgender’ bills that have been passed, it is quite natural to think immediately of the restrictions a lot of Republican states put on medical ‘gender-affirming care’ for minors. Interestingly, these are not really the bills that the authors of this study claim massively increase suicide attempts. Although this type of bill takes a prominent place in the introduction, it is only on page 9 (when most people have stopped reading) that the authors describe the relevant laws that have been enacted. Out of the 48 laws that are supposed to make TGNB youth want to kill themselves:

30 “discriminate against transgender individuals in regard to participation in sports” (p.9), in other words base participation in sports according to biological sex, not gender identity

7 restrict access to “gender-affirming healthcare” (p.9)

4 restrict participation in school activities (no further explanation given)

3 are bathroom bills, i.e. natal boys not allowed to use the girls’ bathroom

1 is about transgender people not being protected from religious-based discrimination (I suppose, a Christian baker not wanting to bake a transflag-colored cake and being allowed to refuse service)

So in essence, mostly barring boys who identify as girls from playing on girls’ teams leads to 7-72% more suicide attempts. Put into real numbers, the sample mean of past-year suicide attempts among 13-17-year-olds was 0.539, which means roughly one suicide attempt for every two people in this group. Factoring in the effect of the laws, this number should go up to 0.57673-0.9271 (7-72% range). So, in the most extreme case, there would be almost one attempt per person just because they had to play soccer with their natal sex, not the one they wished to. Clinically speaking, this seems to be an unreasonably large estimate. Given that a certain number of suicide attempts unfortunately are successful and overall child suicide is fortunately very low, this would show up in suicide statistics. I am no expert on these statistics, but if you find such extreme estimates, your academic integrity should force you (and, more importantly, peer reviewers have a duty to remind the authors) to pull up other official statistics that back up these findings. It is also striking that the suicide attempt statistic is never put into perspective with other studies on suicide of TGNB youth, which suggest that while suicidality in this population is elevated, it is comparable to that of youth with other mental comorbidities.

If you’re well-meaning towards the authors and the journal, this is simply neglect. If you’re less charitable and suspect that the authors simply did not want other evidence to destroy their story, it’s a deep lack of integrity and scientific ethos. Importantly, this applies to the journal editors and peer reviewers as well, because it would have been their job to ask the authors to back up their findings with alternative, more reliable data.
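The scaling used in the paragraph above is plain arithmetic: the baseline mean is multiplied by one plus the relative increase at each end of the reported range.

```python
def scaled_mean(baseline, relative_increase):
    """Baseline mean after a proportional increase (0.07 means +7%)."""
    return baseline * (1 + relative_increase)


baseline = 0.539  # past-year attempts per respondent, ages 13-17 (from the text)
low = scaled_mean(baseline, 0.07)
high = scaled_mean(baseline, 0.72)
print(f"implied range: {low:.5f} to {high:.5f}")
```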

Incomplete literature review and forbidden conclusions

Normally, a topic as contentious and difficult as suicide of gender-distressed youth would require a very careful discussion of the existing literature just to get a feeling for the phenomenon itself. However, the authors do not cite or mention any of the recent studies that put the suicide question into perspective. There is no shortage of them: a large study of gender clinics across the UK, Canada and the Netherlands showed that rates of suicidality among gender-distressed youths are higher than in the overall population but similar to those of young people with other mental comorbidities[4]. A study of the English Gender Identity Development Service (GIDS) provided a similar result[5], whereas a longitudinal study from Finland showed that receiving a diagnosis of gender dysphoria does not seem to be associated with suicide mortality[6]. Most prominently, the Cass Review looked at completed suicides within GIDS and found that suicide was extremely rare and only occurred when the patients suffered from other deep and complex mental comorbidities[7]. All these studies, none of which is referred to, suggest that the topic of suicide of gender-distressed children should be treated just the way suicide is treated in any other mental health condition. Moreover, it should be made clear that there is a substantial difference between suicide attempts and death by suicide, as pointed out by the studies cited above. Also, in the final paragraph the authors suggest that the result is symmetric. That is, while more conservative policies endanger lives, advancing pro-trans policies, including gender-affirming care for minors, is life-saving. But the former does not directly imply the latter, simply because these are two different sets of policies. And as the Cass Review, so far the only systematic review of evidence, points out: there is no evidence that the latter is true.

Confirmation bias

All of the authors of this study are currently or formerly employed at the Trevor Project, a US-based charity that provides “Crisis Services to LGBTQ+ young people 24/7”, public education and advocacy on anything related to LGBTQ+ youth. The charity continuously pushes the claim that gender-affirming care for minors “saves lives” by reducing suicidal ideation. This claim generally rests on very poor research and has been debunked by the Cass Review. Hence, it’s a charity with a noble aim relying on un-evidenced and potentially harmful methods to get there. To be fair, we all have our biases, even when we are dedicated to serious research. If the study had used excellent data, presented all findings in a balanced manner and clearly named all remaining flaws in the introduction, the authors’ ideological alignment itself would not be a problem. But given the substantial flaws of this study, it matters. It matters because not mentioning the flaws suggests that it was all about confirmation bias to begin with.

Conclusion: Bad Science is worse than no science

While not exhaustive, I’ve outlined several reasons why this study has serious flaws. The most concerning aspect is its claim to scientific rigor, using terms like ‘causal,’ highlighting large sample sizes, and employing advanced statistical techniques, all while presenting its findings as groundbreaking. Unlike much of the prior research in gender studies, the issues with this study aren’t immediately obvious to those without a background in social science research, and that’s precisely the danger. It takes several hours to uncover the flaws, but once you do, they are substantial. But in that time, the study has already made its way through newsrooms and solidified the notion that any legislation not approved by trans rights organizations can kill vulnerable children. In any serious doctoral program in social science, you could not have used a paper like this for your doctoral dissertation. But in this exceptional case of institutional capture, it’s enough to get published in a prestigious journal.

[1] https://www.nature.com/articles/s41562-024-01979-5.epdf?sharing_token=EbX7LsH7-AF5n99850vpnNRgN0jAjWel9jnR3ZoTv0PNveFlXHsicuqelg3jvg1Wcsju1CXHxspC9onbX6frEcU1-J5M25Ml5piLTNjBr959LGK7ejPr20VtTVSb18ArMlJnGNGgZYyU9CJQoJuUjN01H4VVGluDqO_epnWIg_A%3D

[2] Card, David, and Alan B. Krueger. "Minimum wages and employment: A case study of the fast food industry in New Jersey and Pennsylvania." (1993).

[3] https://www.thetrevorproject.org/survey-2022/#suicide-by-gender

[4] de Graaf, Nastasja M., et al. "Suicidality in clinic-referred transgender adolescents." European Child & Adolescent Psychiatry (2022): 1-17.

[5] Biggs, Michael. "Suicide by clinic-referred transgender adolescents in the United Kingdom." Archives of Sexual Behavior 51.2 (2022): 685-690.

[6] Ruuska, Sami-Matti, et al. "All-cause and suicide mortalities among adolescents and young adults who contacted specialised gender identity services in Finland in 1996–2019: a register study." BMJ Ment Health 27.1 (2024).

[7] https://cass.independent-review.uk/home/publications/final-report/

The authors state in their description of Eqn (1) that "The robust standard errors are clustered at the state level (s)." Any idea how to prove that they did not actually do so?